We’ve been telling ourselves stories about artificial intelligence for a long time. I grew up loving these stories. Movies and TV shows fueled my early curiosity: the killer machines of Terminator; the dependable sidekicks of Star Wars and Star Trek: The Next Generation; and that perfect pairing, David Hasselhoff’s hair and KITT, the artificially intelligent Trans Am in Knight Rider.

The stories we tell ourselves about AI often fall into two camps. On one side are the “they’ll take over and destroy us all” people; on the other are the “they will serve us well” folks. The gulf between these positions is…expansive. We tell ourselves these stories because we both fear and desire this technology’s advancement, and now that AI is a part of our lives, we grow increasingly reliant upon it while simultaneously uncertain, even wary, of its power over us.

This is for good reason. AI recommends to us our movies and music and books, the restaurants we eat at, the people we follow. It influences our elections. It is shaping our perspectives, shaping us, and it does so without “thinking” at all.

The first story I ever read about artificial intelligence (long before I was familiar with the term “artificial intelligence”) was Ray Bradbury’s “I Sing the Body Electric!” In it, a mother dies, and the grieving father decides to get his four children “The Toy that is more than a Toy,” the Fantoccini Electrical Grandmother. Grandma cares tirelessly for the family, but can’t convince them that she loves them. No matter. As Grandma says, “I’ll go on giving love, which means attention, which means knowing all about you.”

We’ve grown used to this attention even if AI as it exists today is not smart. According to Janelle Shane, current AI typically has the “approximate brainpower of a worm” (5). This shows again and again in her delightful book You Look Like A Thing and I Love You and on her blog AI Weirdness, when AI attempt to tell a joke or deliver a pick-up line such as: “You must be a tringle? Cause you’re the only thing here” (2).

In his pragmatic manual, The Hundred-Page Machine Learning Book, AI engineer Andriy Burkov clarifies that “machines don’t learn,” at least not the way people do (xvii). Typically, a “learning machine” is given a collection of inputs or “training data,” which it uses to produce the desired outputs. But if those inputs are distorted even slightly, the outputs are likely to be wrong, as we’ve seen all too often in predictive policing efforts, risk assessment scoring, and job applicant review. Amazon’s AI recruiting tool is a case in point: an algorithm is only as good as its data, and because Amazon’s computer models were trained by observing patterns in the male-dominated tech field’s hiring practices over a 10-year period, the tool learned to treat women as poor job candidates. As they say in the industry: garbage in, garbage out.
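For the curious, “garbage in, garbage out” can be sketched in a few lines of Python. This is a toy, not Amazon’s system or any real recruiting tool: the hiring records are invented, and the names `train` and `recommend` and the 0.5 cutoff are assumptions made purely for illustration. The “learning machine” here does nothing more than memorize how often each group was hired in the past and repeat that pattern.

```python
from collections import defaultdict


def train(records):
    """Learn the historical hire rate per group from (group, hired) pairs."""
    counts = defaultdict(lambda: [0, 0])  # group -> [hires, total applicants]
    for group, hired in records:
        counts[group][0] += int(hired)
        counts[group][1] += 1
    return {group: hires / total for group, (hires, total) in counts.items()}


def recommend(model, group, threshold=0.5):
    """Recommend a candidate only if their group was hired often enough before."""
    return model.get(group, 0.0) >= threshold


# Invented, deliberately skewed history: 80 men (60 hired), 20 women (5 hired).
history = (
    [("man", True)] * 60
    + [("man", False)] * 20
    + [("woman", True)] * 5
    + [("woman", False)] * 15
)

model = train(history)
print(model)                                                # {'man': 0.75, 'woman': 0.25}
print(recommend(model, "man"), recommend(model, "woman"))   # True False
```

Nothing in the code mentions merit. The lopsided recommendation comes entirely from the lopsided records it was handed, which is the whole point of the saying.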

AI presents an interesting reflection of the people it serves. In the 1920s, Czech writer Karel Čapek introduced the world to the word “robot” in his play RUR, or Rossum’s Universal Robots. The term has its origins in an old Church Slavonic word, robota, meaning “servitude,” and in RUR, the robots indeed serve, a mass-produced labor force mass-producing for an idle humanity. As Ivan Klíma writes in his introduction to the play, “The robots are deprived of all ‘unnecessary’ qualities: feelings, creativity, and the capacity for feeling pain.” They are the perfect labor force, until they revolt—I’m sure you saw that coming. When asked by the last remaining person why the robots have destroyed humanity, their leader responds, “You have to conquer and murder if you want to be people!”

We see our own distorted reflection in YouTube’s recommendation algorithm, which determines “up next” clips that appear “to constantly up the stakes,” says associate professor at University of North Carolina’s iSchool, Zeynep Tufekci. Tufekci describes YouTube as “one of the most powerful radicalizing instruments of the 21st century,” with videos about vegetarianism leading to videos about veganism and videos about Trump rallies leading to “white supremacist rants, Holocaust denials and other disturbing content.” The algorithm doesn’t care how we spend our time; it just wants us to stay, and if that means feeding us hypnotically salacious and potentially dangerous misinformation, so be it. While many point fingers at YouTube, blaming them for this radicalization—and no doubt they bear some responsibility—we seem unwilling to explore what this says about ourselves. Seeing a machine capture our attention with garbage content is a little like taking a look in the black mirror (sorry, I couldn’t help myself).

A bored, pre-Internet kid living on the edge of Tulsa, Oklahoma, I didn’t read books; I devoured them. Sometimes I miss it—the way I could spend whole days with a book without the distracting pull of my phone. I miss my local librarian, too. I can’t remember her name, what she looked like, but I remember her asking me if I’d seen Blade Runner, and when I said no—it was rated R—she pressed Philip K. Dick’s 1968 Do Androids Dream of Electric Sheep? into my hands.

Knowing this was content worthy of an “R” rating, I took the book home and read it in my secret place under the piano and when I reemerged the world was different. I was thinking about the nuclear dust of World War Terminus and Deckard in a lead codpiece tending to his electric sheep. In his quest to own a real animal, Deckard hunts down five Nexus-6 androids, the most sophisticated and lifelike of the andys, retiring them all. After a trip to the wasteland that is Oregon, he finally gets his animal, a toad, but as it turns out, the creature is electric. No biggie, Deckard will simply set his mood organ to wake him in good spirits, not unlike how we use the internet, always there, always ready to serve up content, to distract us from our troubles.

When it comes to AI, Do Androids Dream of Electric Sheep? doesn’t sit cleanly in one camp or another—it exists in that messy middle ground. AI can be destructive, yes, but they will never rival humans in this capacity. In retrospect, I’m surprised that my local librarian pushed me in this direction—Philip K. Dick being not exactly for kids—and grateful. Monuments should be built to librarians, able to intuit a reader’s needs, the original intelligent recommenders.

I don’t spend much time on YouTube, but my 11-year-old daughter and her peers are infatuated with it. At school, when she finishes her work early, she is rewarded with screen time on a Google Chromebook pre-loaded with YouTube where she watches cute animal or Minecraft videos. I’m not so concerned about the content of these videos—the school has filters in place to keep out most, if not all, of the trash—but I do worry that my daughter and her peers view YouTube as THE information source, that they trust—and will continue to trust—a recommendation algorithm with the brainpower of a worm to deliver their content.

The first time I saw the term “feed” used to describe our personal channels of information—our inputs—was in M.T. Anderson’s 2002 novel of the same name. The teenage narrator Titus and his friends have the feed implanted into their brains, providing them with a constant stream of information, dumbed-down entertainment, and targeted ads. What’s perhaps most frightening about this prescient book, written before there even was a Facebook, is the characters’ distractibility. Around them the world dies, and the feed itself is causing skin lesions. But when faced with the terrible and real loss of his girlfriend, Titus can’t deal with it; he orders pants, the same pair in slate, over and over, “imagining the pants winging their way toward [him] in the night.”

While I don’t necessarily sit in the “kill us all” camp, I do worry that AI in its present stupid form is turning on us unknowingly, serving up content that feeds into our basest needs and fears, distracting us from pressing issues like climate change.

In Life 3.0: Being Human in the Age of Artificial Intelligence, physicist and cosmologist Max Tegmark argues that the conversation around AI is the most important of our time, even more important than climate change. He’s not alone in this sentiment. Elon Musk situated himself firmly in the “destroy us all” camp when he declared AI “our biggest existential threat.” And in September 2017, while Robert Mueller was conducting his investigation into Russian interference in the 2016 U.S. presidential election, Vladimir Putin told a room full of students in Yaroslavl, Russia, “The one who becomes the leader in this sphere will be the ruler of the world.” Indeed, according to venture capitalist Kai-Fu Lee, in his book, AI Superpowers, we are in the midst of an AI revolution. In China, funds for AI startups pour in from “venture capitalists, tech juggernauts, and the Chinese government,” and “students have caught AI fever” (3). It’s safe to say that while AI doesn’t yet have the intelligence of our stories, the stakes surrounding the technology have never been higher—it is influencing us, changing us, not necessarily for the better.

Increasingly, we engage with AI in our lives—we have experiences with them. They help us; they make us angry. They sell us McMuffins and give us skin care advice. Sometimes we thank them (or at least I do, is that weird?). More recent stories explore the complicated connections that people form with AI as our lives become more entangled with the technology.

In Ted Chiang’s 2010 novella, The Lifecycle of Software Objects, former zoo trainer Ana Alvarado works for a tech startup raising artificially intelligent digients with complex language skills and the learning capacity of children, intended to serve as pets in the virtual reality of Data Earth. Ana and the other trainers can’t help but become attached to their digients, which proves problematic when the tech startup goes under and the platform for the digients becomes isolated. To raise enough money to build a port to the latest, popular platform, some of the trainers consider the option of licensing their digients to a developer of sex toys, even as Ana prepares hers to live independently. Similarly, Louisa Hall’s 2015 novel, Speak, explores the relationships humans develop with AI. A child named Gaby is given an artificially intelligent doll to raise as her own; its operating system, MARY, is based on the diary of a 17th-century Puritan teen. When the doll, deemed “illegally lifelike,” is taken from her, Gaby shuts down; she cannot speak.

Algorithms that support natural language processing allow us to communicate with machines in a common language, which has stirred up an interesting conversation in the field of law around AI and free speech rights. As communicative AI become more self-directed, autonomous, and corporeal, legal scholars Toni M. Massaro and Helen Norton suggest that one day it may become difficult to “call the communication ours versus theirs.” This, in turn, raises questions of legal personhood, a concept that is startlingly flexible, as we’ve seen with corporations. Courts have long considered corporations to have certain rights afforded to “natural persons.” They can own property and sue and be sued, but they can’t get married. They have limited rights to free speech and can exist long after their human creators are gone. Given the flexibility of the concept of personhood, it’s not a leap to imagine it applied to AI, especially as the technology grows more sophisticated.

Buy the Book

Autonomous

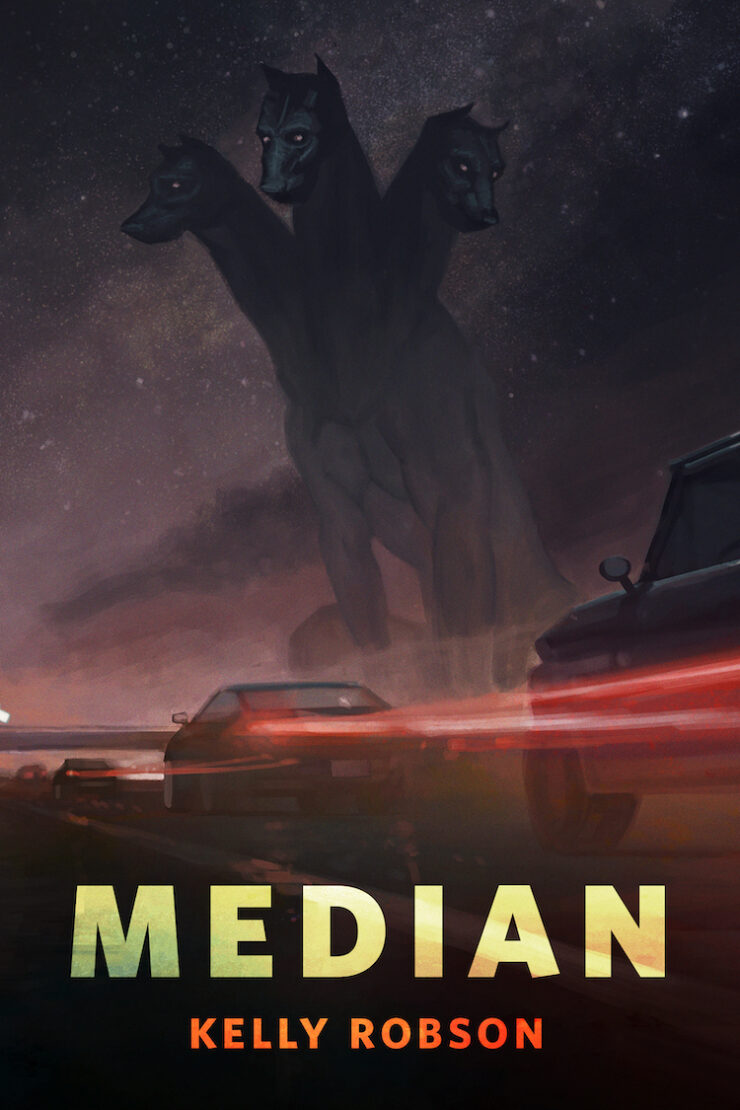

Annalee Newitz takes a close look at the issue of personhood as applied to AI in their 2017 novel Autonomous, in which bots that have achieved human-level intelligence serve the Federation for a minimum of 10 years in order to recoup the cost of their creation and earn their autonomy. Chillingly, corporate lawyers have figured out how to apply these laws back onto humans. In this future world, opening up the concept of personhood to AI erodes what it means to be a person, sending us back to the days of indentured servitude.

Strong AI, otherwise known as artificial general intelligence (AGI), is a machine with the problem-solving skills and adaptability across environments of a human, and it is a major goal of AI research. Perhaps not surprisingly, our designs are decidedly anthropocentric. Mostly when we talk about AI, we’re talking about deep learning—artificial neural networks (ANNs) that imitate natural brains. The problem is we don’t understand how the human brain works, not entirely, not yet, and, as it turns out, we don’t understand how ANNs work either. Even their human designers aren’t entirely sure, which is to say that deep learning is a classic black box scenario—we can observe an AI’s inputs and outputs, but we have no idea how it arrives at its conclusions.

AGI isn’t exactly upon us. Experts in the field don’t agree on how it’ll be achieved, nor can they agree on when or what it will do to us. Some don’t even think it’s possible. That’s why we have stories—simulations that allow us to explore ideas and accrue data—created, and this is the important part, by people outside of the tech field. Ask an expert how we’ll know AGI when we see it, and you’ll get a lengthy description of the Turing Test. Ask Spike Jonze, and you’ll get Her.

In Her, Joaquin Phoenix plays Theodore, a lonely man who purchases an intelligent operating system to help organize his inbox, his contacts, his life. But when the OS—she goes by Samantha—develops worries and desires of her own, Theodore is unable to deny her human-like intelligence and complexity. Samantha doesn’t just have these experiences; she self-reflects on them and shares them. She claims them as her own. She asks for things. Which is to say: we will know we have achieved AGI when machines lay claim to their own experiences and express their own desires, including rights.

Or maybe they won’t care. Maybe they won’t even bother with bodies or individual identities. I mean, they certainly don’t need to do any of these things, to be geographically locatable and discrete units like us, in order to exist.

In William Gibson’s 1984 novel, Neuromancer, an emergent AI orchestrates a mission to remove the Turing Police controls, which keep it from achieving true sentience. Like Her, Neuromancer suggests an AI might lose interest in sloth-like human thinking when presented with another AI on a distant planet. In such a situation, it would leave us behind, of course, and who could blame it? I love stories that end with our technology leaving us. Like, gross humans, get it together.

In the meantime, while we wait, weak AI is advancing in all sorts of unsettling ways. Recently, a New York-based start-up, Clearview AI, designed a facial recognition app that allows users to upload a picture of a person and gain access to public photos—as well as links to where the photos were published—of that person. At the top of Clearview’s website is a list of “facts” that seem designed to resolve any ethical dilemmas related to its technology. Fact: “Clearview helps to identify child molesters, murderers, suspected terrorists, and other dangerous people quickly, accurately, and reliably to keep our families and communities safe.” Yikes! Why is a start-up run by “an Australian techie and one-time model” doing that? I don’t feel safe.

We are now hearing calls for government regulation of AI from powerful voices inside the industry including Musk and Google CEO Sundar Pichai. And while AI makes the news often, the conversations that matter are far too insular, occurring squarely in the tech industry. Dunstan Allison-Hope, who oversees Business for Social Responsibility’s human rights, women’s empowerment, and inclusive economy practices, suggests, “Ethics alone are not sufficient; we need a human rights-based approach.” This would entail involving voices from outside the tech industry while we think about how, for example, facial recognition will be deployed responsibly. This also means we need to be extra mindful of how the benefits of AI are distributed as we enter what Lee refers to as “the age of uncertainty.”

Privacy rights, loss of jobs, and safety are commonly voiced concerns related to AI, but who’s listening? We love our stories, yet when it comes to the AI of now—despite ample reasons to be concerned—we remain largely ambivalent. Research moves rapidly, blindly advancing, largely unregulated, decidedly under-scrutinized—it can overwhelm. Most of us carry on using Facebook and/or Google and/or YouTube, despite what we know. We think: knowing makes us impervious to these influences. We hope the problem is not us. We don’t need an algorithm to tell us that we’re wrong.

Katie M. Flynn is a writer, editor, and educator based in San Francisco. Her short fiction has appeared in Colorado Review, Indiana Review, The Masters Review, and Tin House, among other publications. She has been awarded Colorado Review’s Nelligan Prize for Short Fiction, a fellowship from the San Francisco Writers Grotto, and the Steinbeck Fellowship in Creative Writing. Katie holds an MFA from the University of San Francisco and an MA in Geography from UCLA. The Companions is her first novel. Follow her on Twitter @Other_Katie or visit her website.